What if you could tell Facebook which ads you like and let YouTube know about your love of 90s California hip-hop? A truly personalized algorithm… win-win. CTRLZ wanted to explore this algorithmic utopia.

At first everything was fine. You got to know each other, and he surprised you with daring and unexpected suggestions. Then it became routine. The more he got to know you, the fewer risks he took. The same things, ad nauseam. It was too much; it was time to take matters in hand.

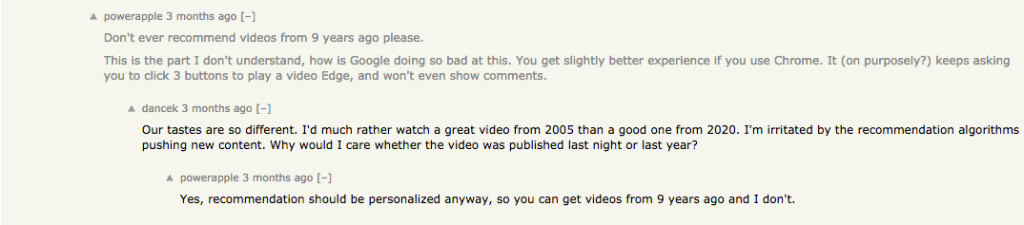

He is your recommendation algorithm. And you’re not the only one who’s tired of him. On the forums, the same question keeps coming up: why does YouTube keep recommending songs we already know by heart? Or serve us hits from the 90s when we are tuned in to the latest releases?

“My biggest problem with YouTube recommendations, or any service like that (Spotify, Amazon, etc.), is that they don’t understand what aspects of the content I like”, laments one user on the Y Combinator forum. “Sometimes it works because I imagine I’m looking for the same things as most other people. Other times, I can watch a one-hour video that explores a topic in detail, and for more than a week YouTube will suggest popular and rather superficial five-minute videos. There’s nothing wrong with these videos, they’re pretty well done, but that’s not what I’m looking for”, he continues. Others suggest solutions: “Maybe a ‘this is cool, but enough for now’ button? We could keep a category we like but lower the frequency of recommendations for a while”.

Don’t touch my algo

Chris Lovejoy is a data scientist. He decided to tackle the problem head on and created his own algorithm. He explains his approach in a post on his blog:

“I love watching YouTube videos that improve my life in some tangible way. Unfortunately, the YouTube algorithm doesn’t agree. It likes to feed me clickbait and other garbage”

He then developed his own quality criteria (among others, the ratio between the popularity of the channel and the popularity of the video) and set up a system to receive recommendations by email rather than having to scroll. In this way, he discovers videos that he considers interesting and that are usually quite low in the YouTube recommendations. Lovejoy has also made his code freely available on GitHub.
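As a rough illustration of that kind of criterion, here is a minimal sketch that ranks videos by how many views they get relative to the size of their channel. The `Video` fields, the `quality_score` formula and the `min_subscribers` floor are assumptions made for this example; they are not taken from Lovejoy's published code.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    views: int
    channel_subscribers: int


def quality_score(video: Video, min_subscribers: int = 100) -> float:
    """Views per subscriber: high when a video outperforms its channel's usual reach.

    The floor on subscribers (an assumption) keeps tiny channels from
    producing extreme ratios.
    """
    subscribers = max(video.channel_subscribers, min_subscribers)
    return video.views / subscribers


def rank(videos):
    """Return the videos sorted from highest to lowest quality score."""
    return sorted(videos, key=quality_score, reverse=True)


# Hypothetical data, for illustration only.
videos = [
    Video("Viral hit from a huge channel", views=2_000_000, channel_subscribers=10_000_000),
    Video("Deep dive from a small channel", views=50_000, channel_subscribers=5_000),
]
ranked = rank(videos)
for video in ranked:
    print(f"{quality_score(video):6.2f}  {video.title}")
```

With a score like this, a modest video that travels far beyond its channel's audience rises above a blockbuster that merely meets expectations, which is the kind of video a popularity-driven ranking tends to bury.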

Others, like Antoine, 35 years old and a fan of rock and experimental music, take care of their algorithm by jealously guarding it:

“I absolutely do not let anyone touch my YouTube and Spotify accounts to avoid picking up shit”.

His girlfriend has similar tastes, “but not exactly,” he says. “She also listens to recent stuff that I wouldn’t be able to classify. Neo-bobo stuff”. Enough to create trench warfare:

“On the Apple TV, I have to fight to get my girlfriend to open a guest YouTube session. Otherwise I end up with crappy suggestions and it’s a real drag. She doesn’t understand because she doesn’t use YouTube as intensively as I do”.

Children also tend to ruin his algorithm. And there’s worse: family parties. After Christmas, when he had left fireplace videos running, it took a few days for his suggestions page to be free of such content again.

Others, on the other hand, invite as many different people as possible onto their accounts to encourage diversity. “As a result, my algorithm makes no sense”, rejoices 30-year-old Nils.

No one really knows how to influence recommendation algorithms, but many are trying. Among the tips gleaned from the Internet to rekindle the flame: delete your history and be more proactive in your listening, since discovery leads to discovery. This is what Pierre Lapin calls “beefing up” his algorithm. In Vincent Manilève’s immersive article on alternative TikTok, he advises his comrade to get out of “Vanilla TikTok” and dive into “Deep TikTok”. Liking what you like, checking out the profiles of interesting creators, sharing the least mainstream content, indicating when you’re not interested and, above all, scrolling and scrolling again… This is how he described his approach when we talked to him:

“I don’t know if it’s really manipulation but rather taking back our responsibility as viewers. Your algorithm is kind of a mirror of your consumption”.

Regaining Control?

Imagine a world where you could tell YouTube that you don’t like music that’s too mainstream, but that you are fond of old school music. Imagine a world where you could let Facebook, which has long since figured out that you’re in the middle of a move, know that you do indeed enjoy furniture ads and that it’s welcome to offer you more. Wouldn’t that be… wonderful?

Wonderful, and probably naive, as Guillaume Chaslot, founder of the AlgoTransparency organisation and former Google employee in charge of the YouTube recommendation algorithm, explains:

“The problem is that the AI [of YouTube] isn’t built to help you get what you want; it’s built to get you addicted. Recommendations were designed to waste your time”.

And when the goal is to increase the time you spend on the platform, extreme content works better than quality content.

An observation shared by Chris Lovejoy, the developer and inventor of an alternative recommendation algorithm. Interested in his project, representatives of YouTube got in touch with him. “They explained their algorithm to me in more detail, and they take into account a lot more metrics than I did in my project”, he tells CTRLZ. And adds:

“For example, whether someone comes back the next day or leaves a positive comment after viewing. That said, the focus is always on increasing views and engagement. I don’t think that is necessarily incompatible with giving us what we want, but there is no complete alignment of the two. Aligning them could be detrimental to the number of views and engagement, since some people would probably watch fewer videos”.

Lovejoy now avoids YouTube and has an array of extensions to block recommendations.

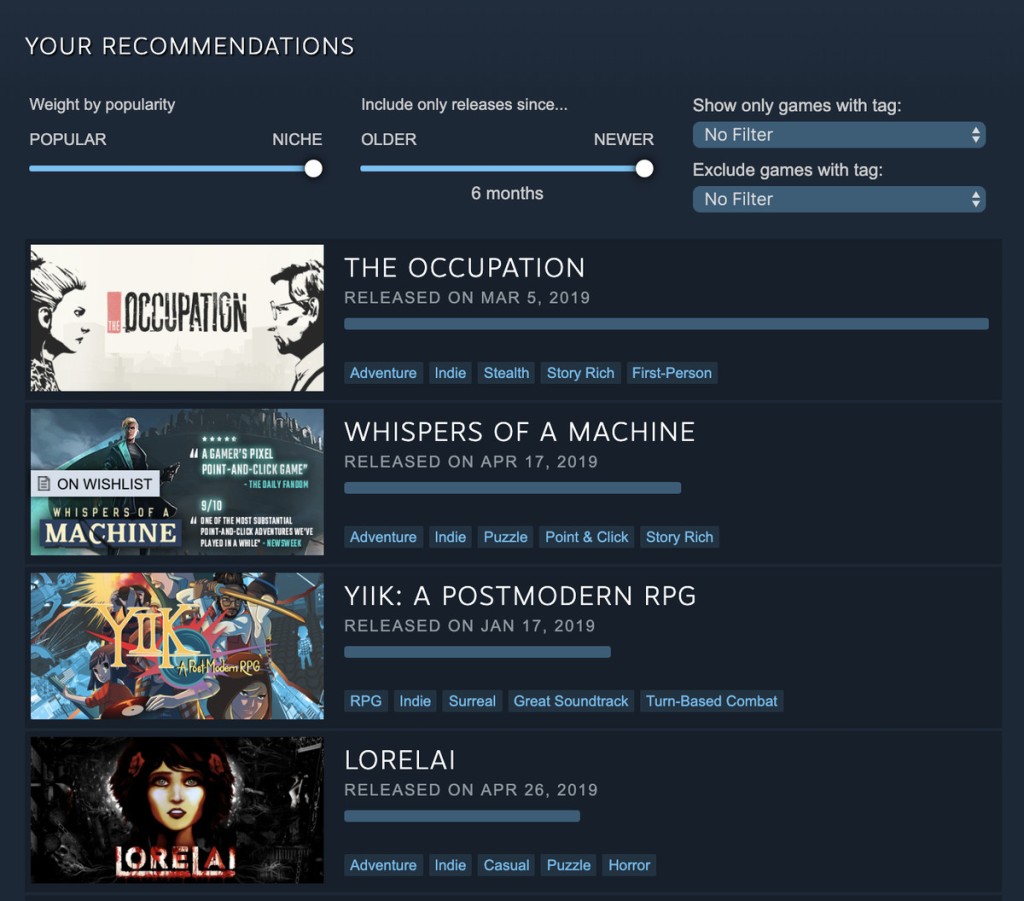

However, some interactive initiatives are emerging. Since 2019, on the video game distribution platform Steam, for example, users can choose whether they prefer mainstream or niche games, old or new. These filters are superimposed on algorithmic recommendations based on the behaviour of users with similar consumption habits. A user-friendly feature that was put in place to appease independent developers with whom the platform had had some problems, according to the American magazine The Verge.
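To picture how user filters can sit on top of an algorithmic score, here is a minimal sketch. Everything in it is hypothetical: the `Game` fields, the `niche_pref` and `new_pref` sliders and the weighting formula are assumptions for illustration, not Steam's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Game:
    name: str
    base_score: float   # score from the underlying recommender, 0..1 (assumed)
    popularity: float   # 0 = very niche, 1 = very mainstream (assumed)
    age_years: float


def adjusted_score(game, niche_pref=0.0, new_pref=0.0, max_age=10.0):
    """Re-weight the recommender's score with two user sliders in [-1, 1].

    niche_pref > 0 favours niche titles, < 0 favours mainstream ones.
    new_pref > 0 favours recent titles, < 0 favours older ones.
    """
    niche_factor = 1.0 + niche_pref * (0.5 - game.popularity)
    recency = 1.0 - min(game.age_years, max_age) / max_age  # 1 = new, 0 = old
    new_factor = 1.0 + new_pref * (recency - 0.5)
    return game.base_score * niche_factor * new_factor


def recommend(games, **prefs):
    """Sort games by their slider-adjusted score, best first."""
    return sorted(games, key=lambda g: adjusted_score(g, **prefs), reverse=True)


# Hypothetical catalogue, for illustration only.
catalogue = [
    Game("Blockbuster", base_score=0.8, popularity=0.9, age_years=1),
    Game("Indie gem", base_score=0.7, popularity=0.1, age_years=1),
]
default_pick = recommend(catalogue)[0].name
niche_pick = recommend(catalogue, niche_pref=1.0)[0].name
print(default_pick, niche_pick)
```

The design choice worth noting is that the sliders multiply the recommender's score rather than replace it: the algorithm still decides what is relevant, and the user only tilts the ranking.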

Adopt an algorithm

While US platforms are still struggling to empower the user, we do know where to go. Head to Flint and Robot School to adopt an algorithm to train. Flint and Robot School are watchdog algorithms created in 2017 to address the rise of misinformation. Benoît Raphaël, co-founder of Flint, explains:

“The news chaos comes mostly from the algorithms of Google and Facebook. They are optimized to promote growth, and therefore time spent on the page, and to slightly modify people’s behavior”.

According to this media professional, to counter a machine, you need another machine: “It has to be a machine that everyone can use to regain control”.

Thus was born Flint, a media monitoring robot that each reader trains and customises by clicking on the articles they like and indicating those they don’t like. The algorithm is created to suggest articles of equivalent quality to those liked and to keep an element of surprise in order to take the reader out of their information bubble. Like Pierre Lapin, Benoît Raphaël believes in individual responsibility, at least in part, for our consumption of content:
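The article does not describe how Flint works internally, so the following is only a toy sketch of the general idea: a profile built from likes and dislikes, plus an occasional random pick to keep an element of surprise. The `ReaderRobot` class, the keyword weighting and the `surprise_rate` parameter are all assumptions made for this example.

```python
import random
from collections import Counter


class ReaderRobot:
    """Toy recommender trained by likes and dislikes (hypothetical design)."""

    def __init__(self, surprise_rate=0.2, seed=None):
        self.weights = Counter()            # keyword -> learned preference
        self.surprise_rate = surprise_rate  # share of picks made at random
        self.rng = random.Random(seed)

    def feedback(self, keywords, liked):
        """Raise the weight of liked keywords, lower it for disliked ones."""
        delta = 1 if liked else -1
        for keyword in keywords:
            self.weights[keyword] += delta

    def score(self, keywords):
        """Sum of learned weights; unseen keywords count as zero."""
        return sum(self.weights[keyword] for keyword in keywords)

    def suggest(self, articles):
        """articles maps a title to its list of keywords."""
        if self.rng.random() < self.surprise_rate:
            # Element of surprise: ignore the profile and pick at random.
            return self.rng.choice(list(articles))
        return max(articles, key=lambda title: self.score(articles[title]))


# Hypothetical articles, for illustration only.
articles = {
    "Long read on climate models": ["climate", "science"],
    "Celebrity gossip roundup": ["celebrity"],
    "Profile of a ceramics workshop": ["craft"],
}
robot = ReaderRobot(surprise_rate=0.0)  # no surprise, so the pick is deterministic
robot.feedback(["climate", "science"], liked=True)
robot.feedback(["celebrity"], liked=False)
best = robot.suggest(articles)
print(best)
```

Raising `surprise_rate` trades accuracy for discovery: the robot follows your training most of the time but regularly steps outside it.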

“It’s thought-provoking. Taking back control of the algorithm also means understanding our relationship with it and taking responsibility for it. What Flint produces is the result of our training: if we don’t ask ourselves questions, we will get the robot we deserve”.

For Facebook, however, he points out, it’s different because the platform works to slightly modify your behaviour.

The paid version of his tool allows you to enter the Robot School, a real training room for small specialised algorithms, where you can feed your robots articles you like and access educational resources to understand the method. To overcome the black box phenomenon, the idea that not all decisions made by AI are necessarily explainable, Benoît Raphaël and his team are working on the interface between the user and the AI. The robot is thus able to give itself a comprehension score to say whether it understands your expectations. It can also show you the content available for your criteria, which allows you to widen (or not) your search:

“To increase the quality of what we do, we must increase the level of requirements of users. This is part of a movement to fully understand algorithms, to eventually disengage from them or to use them with caution and diversify the sources of information”.

They are now developing different models, such as an algorithm that would do the opposite of what you ask for in order to send you content that is furthest from yourself.

Twitter’s Algorithm Library

Will we have control over the algorithms that govern our access to information tomorrow? Benoît Raphaël is convinced:

“The first phase of recommendation algorithms boomed with web 2.0, the interactive web. It allowed everyone to have a very good visibility. Google and Facebook created these ranking algorithms to give the same visibility to people as to traditional media. Over the past five years, we have become aware of the perverse effects and sometimes dramatic biases, particularly for democracy. Today we are aware that we need either more transparency, or more sincerity, or to give a little more control back to the users”

Just as consumers have changed the food industry, which has turned en masse to organic products even in industrial production, Benoît Raphaël thinks that we could see the same movement towards organic algorithms with guaranteed traceability.

In a sign that this vision may not be wishful thinking, Twitter founder Jack Dorsey detailed to a group of investors in February his vision for a decentralised social network called Bluesky. He imagines a library of recommendation algorithms from which users could draw. A sort of “app-store of recommendation algorithms”, he explained. On March 24, ahead of the debates on Section 230, a fundamental piece of legislation that allows participatory sites not to be held responsible for published content (under certain conditions), he added:

“We believe that people should have transparency or meaningful control over the algorithms that affect them. We recognize that we can do more to provide algorithmic transparency, fair machine learning, and controls that empower people. The machine learning teams at Twitter are studying techniques and developing a roadmap to ensure our present and future algorithmic models uphold a high standard when it comes to transparency and fairness”.

While waiting for this era of the citizen algorithm, you can always apply the scientific method to commercial algos: make hypotheses, make comparisons. “You can create two profiles, one Chrome and one Firefox, and see how they react to your different interactions”, advise Gilles Tredan and Erwan Le Merrer, specialists in algorithmic black boxes. In the worst case, this is your backup account ready for your Lou Bega parties!
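One simple way to make such a comparison concrete is to measure how much two recommendation lists overlap. The sketch below uses the Jaccard index, a standard set-similarity measure; the video identifiers are placeholders, and collecting the lists from each browser profile is left to you.

```python
def jaccard(list_a, list_b):
    """Share of items the two lists have in common: 0 = disjoint, 1 = identical."""
    set_a, set_b = set(list_a), set(list_b)
    if not set_a and not set_b:
        return 1.0  # two empty lists are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)


# Placeholder recommendations noted by hand from each profile.
chrome_profile = ["video_a", "video_b", "video_c", "video_d"]
firefox_profile = ["video_c", "video_d", "video_e", "video_f"]

overlap = jaccard(chrome_profile, firefox_profile)
print(f"Overlap between the two profiles: {overlap:.2f}")
```

Repeating the measurement after each deliberate change in one profile (a like, a search, a cleared history) gives a rough idea of which interactions actually move the recommendations.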

GOOD LINKS

CTRLZ recommends TheirTube, an initiative by developer Tomo Kihara: a site that lets you see other people’s YouTube, more precisely that of 6 profiles: fruitarian, prepper (survivalist), liberal, conservative, conspiratorial and climate-denier. The recommendations shown for each profile are generated from the viewing history of each persona (also visible).
